I started my career in release management and, subsequently, CI/CD (Continuous Integration/Continuous Deployment). When I joined TriNimbus some 3.5 years ago to focus on AWS solutions, a number of people asked me, “Why the drastic transition?” My answer was, “Because it makes sense; cloud without CI/CD is like buying a fancy car without a key.” The past few years of working with a diverse set of projects and clients have only strengthened that conviction: I will argue more firmly than ever that operating on the cloud cannot be done adequately without proper CI/CD.

Cloud and Infrastructure Codification

The longer version of the answer is closely tied to the benefits of cloud. As AWS has eloquently put it, cloud promotes economies of scale, removes the guesswork from capacity planning, and converts capital costs into operational costs. However, all this is predicated on a methodical and timely way to map changes in actual user demand to changes in provisioned resources. If (in the extreme case) the resources are provisioned through step-by-step manual entry and clicks, it is very difficult to provision them according to need in a timely fashion, not to mention how error-prone and soul-crushingly tedious manual provisioning is.

What impressed me tremendously about AWS, and still does, is how extensive the AWS APIs are. Every operation possible on the console can be made by calling the AWS API. In fact, the options of individual AWS resources used to be exposed more extensively through the API than through the AWS console, although the console (at least based on my observation) is catching up fast. Armed with the different flavors of SDKs, deployment of AWS infrastructure largely becomes a coding exercise. Instead of spending the same amount of time and incurring the same (or even increasing) amount of risk every time an environment is deployed, deployment becomes a consistently simple exercise. Especially once environment-specific configuration is separated from environment-agnostic code, the same code base can be used to deploy multiple stacks of infrastructure into different accounts and different regions. While AWS CloudFormation is the native service that manages the provisioning of AWS resources, there are third-party tools (e.g. my personal favourite: Terraform) that can also manage AWS resources in a repeatable and idempotent manner.
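As a minimal sketch of separating environment-specific configuration from environment-agnostic code, the Python snippet below builds the arguments for a CloudFormation stack creation from a per-environment table. The environment names, regions, instance sizes, stack naming scheme and parameter keys are all assumptions made up for illustration.

```python
# Environment-specific configuration. All names and values here are
# hypothetical and exist only for illustration.
ENVIRONMENTS = {
    "dev": {"region": "us-west-2", "instance_type": "t3.micro", "min_size": 1, "max_size": 2},
    "prod": {"region": "ca-central-1", "instance_type": "m5.large", "min_size": 2, "max_size": 6},
}


def stack_request(env_name: str, template_body: str) -> dict:
    """Environment-agnostic code: build the arguments for a CloudFormation
    CreateStack call from the selected environment's configuration."""
    cfg = ENVIRONMENTS[env_name]
    return {
        "StackName": f"web-tier-{env_name}",
        "TemplateBody": template_body,
        # CloudFormation expects parameter values as strings.
        "Parameters": [
            {"ParameterKey": "InstanceType", "ParameterValue": cfg["instance_type"]},
            {"ParameterKey": "MinSize", "ParameterValue": str(cfg["min_size"])},
            {"ParameterKey": "MaxSize", "ParameterValue": str(cfg["max_size"])},
        ],
    }


if __name__ == "__main__":
    template = '{"Resources": {}}'  # placeholder; a real template goes here
    for env in ENVIRONMENTS:
        request = stack_request(env, template)
        print(ENVIRONMENTS[env]["region"], request["StackName"])
```

With boto3 installed and credentials configured, the resulting dictionary could be passed along the lines of `boto3.client("cloudformation", region_name=cfg["region"]).create_stack(**request)`; the sketch stops short of that call so it can run without an AWS account.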

But as the infrastructure becomes increasingly codified, the same discipline of validating and testing source code applies to infrastructure code just as it does to any other piece of source code. More often than not, AWS infrastructure is not just a bunch of compute resources provisioned independently, like a number of VMs on which applications run, but a cohesive set of resources that together serve the specific needs of an (ideally) highly available and scalable stack. As an example: a load balancer is often placed in front of a group of (hopefully autoscaling-enabled 🙂 ) EC2 instances that host the services. While each resource can be provisioned independently, the security groups (the equivalent of “firewalls”) need to be configured so that only the ports required by the specific applications are opened. This keeps the stack functional while following the security best practice of minimising the attack surface. It is practically impossible to provision a few static load balancers and instances and expect them not to evolve along with the needs and growth of the applications. All in all, when running on the cloud, the infrastructure is a first-class component of the product that grows hand-in-hand with the other services and applications that form the full product. At the same time, the infrastructure is the foundational layer on which all the services and applications of the product depend.
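To illustrate what testing infrastructure code can look like, here is a small sketch of a check that scans a CloudFormation-style template (already parsed into a Python dictionary) and reports any port opened by a security group that is not on an expected list. The resource names, the ports and the sample template are hypothetical; a real check would also need to handle intrinsic functions and separately declared ingress resources.

```python
def open_ports(template: dict) -> set:
    """Collect every port opened by inline ingress rules of
    AWS::EC2::SecurityGroup resources in the template."""
    ports = set()
    for resource in template.get("Resources", {}).values():
        if resource.get("Type") != "AWS::EC2::SecurityGroup":
            continue
        for rule in resource.get("Properties", {}).get("SecurityGroupIngress", []):
            if str(rule.get("IpProtocol")) == "-1":
                # Protocol -1 means "all traffic": treat every port as open.
                ports.update(range(0, 65536))
            else:
                ports.update(range(int(rule["FromPort"]), int(rule["ToPort"]) + 1))
    return ports


def unexpected_ports(template: dict, allowed: set) -> set:
    """Return the ports that are open but not explicitly allowed."""
    return open_ports(template) - set(allowed)


# Hypothetical template fragment: a load balancer in front of app instances.
SAMPLE = {
    "Resources": {
        "LoadBalancerSG": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {"SecurityGroupIngress": [
                {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443, "CidrIp": "0.0.0.0/0"},
            ]},
        },
        "InstanceSG": {
            "Type": "AWS::EC2::SecurityGroup",
            "Properties": {"SecurityGroupIngress": [
                {"IpProtocol": "tcp", "FromPort": 8080, "ToPort": 8080, "CidrIp": "10.0.0.0/16"},
                {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22, "CidrIp": "0.0.0.0/0"},
            ]},
        },
    }
}

if __name__ == "__main__":
    print(sorted(unexpected_ports(SAMPLE, {443, 8080})))
```

A check like this can run on every commit, so that an accidentally opened port fails the build instead of reaching a live environment.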

Infrastructure CI/CD

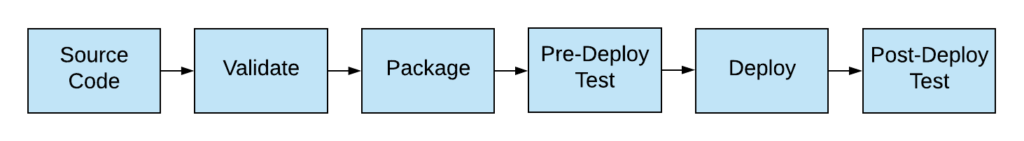

Figure 1: The basic workflow of an infrastructure CI/CD pipeline

Because producing high-quality infrastructure requires this discipline, the CI/CD concept should also be applied to infrastructure source code. The infrastructure code is stored in a source code repository. Every change committed to version control triggers a pipeline that validates the code (e.g. format, syntax check, API check) before it is packaged (even as a simple compressed zip) and stored in the artifact storage. During deployment (in this case, the creation of the infrastructure) corresponding checks are made before and after the infrastructure update to ensure the integrity of the infrastructure and the validity of the source code.
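The validate-then-package portion of that workflow can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: templates are JSON, the syntax check is limited to "parses as JSON and has a Resources section", and the artifact is an in-memory zip rather than an upload to real artifact storage. The function names are made up for this example.

```python
import hashlib
import io
import json
import zipfile


def validate(files: dict) -> list:
    """Return a list of error messages; `files` maps file name to text."""
    errors = []
    for name, text in files.items():
        try:
            document = json.loads(text)
        except json.JSONDecodeError as exc:
            errors.append(f"{name}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(document, dict) or "Resources" not in document:
            errors.append(f"{name}: missing Resources section")
    return errors


def package(files: dict) -> bytes:
    """Bundle the validated files into a compressed zip artifact."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for name in sorted(files):
            archive.writestr(name, files[name])
    return buffer.getvalue()


def run_ci(files: dict):
    """Validate first; only package when every check passes."""
    errors = validate(files)
    if errors:
        raise ValueError("; ".join(errors))
    artifact = package(files)
    # The digest lets later stages confirm they deploy the same artifact.
    return artifact, hashlib.sha256(artifact).hexdigest()


if __name__ == "__main__":
    artifact, digest = run_ci({"stack.json": '{"Resources": {}}'})
    print(len(artifact), "bytes, sha256", digest)
```

The important property is the ordering: nothing is packaged or stored unless validation succeeds, which mirrors the gate between the first two stages of the pipeline.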

Traditional CI/CD tools (e.g. Jenkins, TeamCity, Bamboo) can be used to run the infrastructure CI/CD pipeline, either raising the infrastructure from scratch or updating it from a specific baseline snapshot. However, AWS (thankfully) offers its own tools that support infrastructure CI/CD in a cost-effective and efficient manner.


Infrastructure CI/CD with the AWS Developer Tools

The AWS Developer Tools set consists of:

- AWS CodeBuild

- AWS CodePipeline

- AWS CodeDeploy

- AWS CodeCommit

- AWS CodeStar

- AWS Cloud9

- AWS X-Ray

Together they form an end-to-end experience (from source code to deployment) that is extensible to different environments. The infrastructure CI/CD pipeline shown earlier can be implemented with four of them in particular: AWS CodeCommit, AWS CodeBuild, AWS CodeDeploy and AWS CodePipeline.

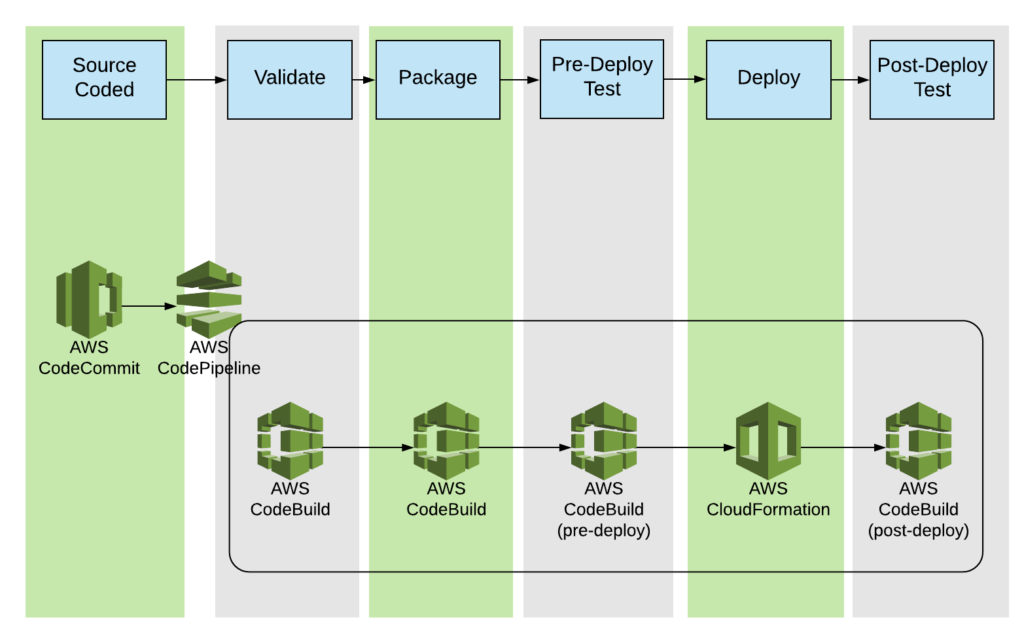

Figure 2: How AWS Developer Tools can be used to implement an infrastructure CI/CD pipeline

In Figure 2:

- AWS CodeCommit acts as the version control. Given that AWS CodeCommit supports the Git protocol, anyone with Git experience can adopt the service easily.

- AWS CodeBuild acts as the managed build system; in other words, AWS CodeBuild is like a Jenkins server fully managed by AWS (it is actually flexible enough to also act as a build agent for a Jenkins server). Using YAML syntax, it is easy to configure the exact build steps in a build workflow. Users can choose to run their builds with either AWS-provided or customized/user-created Docker images, which supports a wide range of technologies. Because of its versatility, AWS CodeBuild is used in multiple steps of this pattern: the initial syntax check, the packaging step, the configuration of the environment, and the pre-/post-deployment validations.

- AWS CodePipeline is the backbone orchestration mechanism that coordinates the steps within the pipeline. It has direct support for AWS CodeBuild, AWS CodeDeploy, AWS Elastic Beanstalk, AWS CloudFormation, AWS OpsWorks, Amazon ECS, and AWS Lambda, while the sources that trigger a pipeline include AWS CodeCommit and Amazon S3.
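To make the division of labour in Figure 2 more concrete, the sketch below assembles a pipeline definition as a Python dictionary, shaped like the pipeline structure that AWS CodePipeline accepts, and checks that every stage only consumes artifacts produced by an earlier stage. The stage names, action names, CodeBuild project names, artifact names and the `stack.json` template path are hypothetical, and the deploy stage uses the CloudFormation action as one plausible choice.

```python
def build_pipeline(name, repo, branch, bucket, pipeline_role_arn, cfn_role_arn, stack_name):
    """Return a CodePipeline-style definition: source, validate, deploy, verify."""

    def action(action_name, category, provider, configuration, inputs=(), outputs=()):
        return {
            "name": action_name,
            "actionTypeId": {"category": category, "owner": "AWS",
                             "provider": provider, "version": "1"},
            "configuration": configuration,
            "inputArtifacts": [{"name": n} for n in inputs],
            "outputArtifacts": [{"name": n} for n in outputs],
        }

    return {
        "name": name,
        "roleArn": pipeline_role_arn,
        "artifactStore": {"type": "S3", "location": bucket},
        "stages": [
            {"name": "Source", "actions": [action(
                "Checkout", "Source", "CodeCommit",
                {"RepositoryName": repo, "BranchName": branch},
                outputs=["SourceOutput"])]},
            {"name": "Validate", "actions": [action(
                "SyntaxCheckAndPackage", "Build", "CodeBuild",
                {"ProjectName": f"{name}-validate"},
                inputs=["SourceOutput"], outputs=["Package"])]},
            {"name": "Deploy", "actions": [action(
                "UpdateStack", "Deploy", "CloudFormation",
                {"ActionMode": "CREATE_UPDATE", "StackName": stack_name,
                 "TemplatePath": "Package::stack.json", "RoleArn": cfn_role_arn},
                inputs=["Package"])]},
            {"name": "Verify", "actions": [action(
                "PostDeployChecks", "Test", "CodeBuild",
                {"ProjectName": f"{name}-verify"},
                inputs=["Package"])]},
        ],
    }


def missing_inputs(pipeline: dict) -> list:
    """List (stage, artifact) pairs whose input was not produced earlier."""
    produced, missing = set(), []
    for stage in pipeline["stages"]:
        for act in stage["actions"]:
            for artifact in act["inputArtifacts"]:
                if artifact["name"] not in produced:
                    missing.append((stage["name"], artifact["name"]))
        for act in stage["actions"]:
            produced.update(a["name"] for a in act["outputArtifacts"])
    return missing
```

Keeping the definition in code means the pipeline itself can be reviewed, versioned and validated like the infrastructure it deploys.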

While the individual tools can be replaced by non-AWS tools (for example, AWS CodeCommit can easily be replaced by GitHub), the AWS Developer Tools have the advantage of being easily scalable.

One other advantage is security, as IAM users, roles and policies are supported by all the tools listed above. This allows granular security permissions to be controlled to ensure the tools can only do what is expected. Also, all the calls are logged in CloudTrail, hence the usual auditing and logging capability within AWS is uniformly supported by the infrastructure CI/CD pipeline.
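As a rough illustration of such granular permissions, the following sketch defines an IAM policy document for a build role as a Python dictionary and includes a small lint function that flags `Allow` statements with wildcard actions. The bucket name and log group prefix are placeholders, and the exact set of actions a real build role needs will depend on what the build does.

```python
# Hypothetical policy for a build role: read and write pipeline artifacts,
# write build logs, and validate templates. Names are placeholders.
BUILD_ROLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject"],
            "Resource": "arn:aws:s3:::example-artifact-bucket/*",
        },
        {
            "Effect": "Allow",
            "Action": ["logs:CreateLogGroup", "logs:CreateLogStream", "logs:PutLogEvents"],
            "Resource": "arn:aws:logs:*:*:log-group:/aws/codebuild/*",
        },
        {
            "Effect": "Allow",
            "Action": "cloudformation:ValidateTemplate",
            "Resource": "*",
        },
    ],
}


def wildcard_actions(policy: dict) -> list:
    """Return every allowed action that contains a wildcard, e.g. 's3:*'."""
    flagged = []
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]  # IAM allows a single string or a list
        flagged.extend(a for a in actions if "*" in a)
    return flagged


if __name__ == "__main__":
    print(wildcard_actions(BUILD_ROLE_POLICY))
```

A lint step of this kind could itself run in the validation stage, so that overly broad permissions are caught before the policy is deployed.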

Finally, all these tools come at a very attractive price point. For example, an active pipeline in AWS CodePipeline costs only $1/month to run. The pricing is attractive on its own, even before counting the extra savings from the reduced operational overhead and the “automagical” scalability of the service.

Summary

The above is only a high-level introduction to running CI/CD pipelines that provision cloud infrastructure, and to how the AWS Developer Tools can be used to implement such CI/CD workflows in a highly scalable, secure and cost-effective manner.

If you are looking for more architectural strategies that can generate significant financial and service quality dividends for your organization on AWS, please download our free eBook, “5 Post Migration Strategies to Increase Your Cloud ROI”: https://insights.onica.com/five-post-cloud-migration-strategies-to-increase-roi?utm_source=website&utm_medium=blog